CAD model designed in SolidWorks.

3D printed on a Bambu Lab A1.

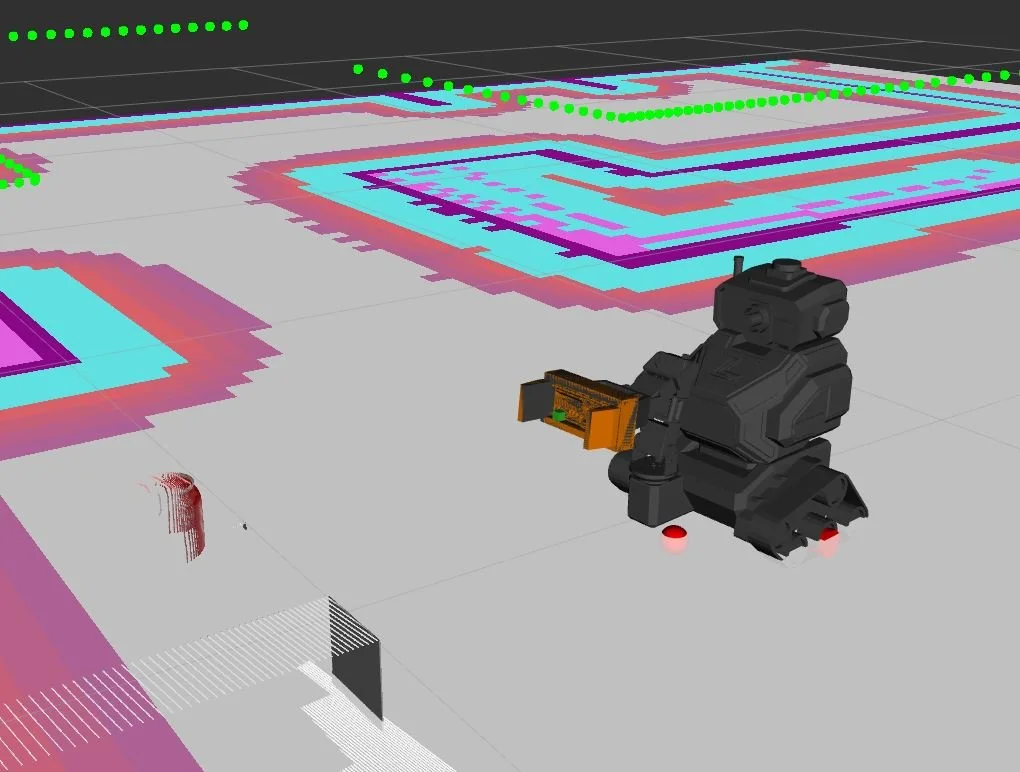

Live sensor data displayed in RViz.

Wilson

GitHub Repository - Try the simulation!

An AI-powered robot for autonomous beverage retrieval

Wilson is a robot designed to autonomously retrieve your beverage of choice from a mini-fridge. Built on a differential-drive base with a 4-DOF manipulator, Wilson runs on ROS 2 and was developed in Gazebo simulation to accelerate testing and deployment. At its core, the robot uses the Gemini Live API to handle low-latency, bidirectional voice communication and to decide when to invoke its navigation, perception, and manipulation tools. This lets you simply say, “Wilson, can I have a Coke?” and have Wilson handle everything from planning to delivery. Wilson’s mechanical components and body were custom-designed in SolidWorks and fabricated in-house (my house) on a Bambu Lab A1 3D printer.

Hardware

Differential-drive base with a 4-DOF manipulator.

Raspberry Pi 5 (8 GB) main computer.

Two Arduino Nano microcontrollers for motor and servo control.

LD-19 LiDAR for navigation and mapping.

ArduCam ToF camera for depth perception.

USB camera for visual recognition.

Custom soft 3D force sensor in the gripper (developed with University of Kansas researchers).

Custom parallel gripper mechanism and body fully designed in SolidWorks and 3D-printed.

Two GA37-520 geared DC motors with encoders, driven by an L298 motor driver.

Five 20 kg digital servos.

3S LiPo battery.
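To make the differential-drive base concrete, here is a minimal sketch of the standard kinematics that turn a velocity command (forward speed plus turn rate) into left and right wheel speeds. The wheel radius and wheel separation below are placeholder values I made up for illustration, not Wilson's measured dimensions, and `DiffDriveGeometry` and `wheel_speeds` are hypothetical names rather than code from the repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiffDriveGeometry:
    """Base geometry. Both values are assumptions, not Wilson's real dimensions."""
    wheel_radius_m: float
    wheel_separation_m: float


def wheel_speeds(linear_mps: float, angular_radps: float,
                 geom: DiffDriveGeometry) -> tuple[float, float]:
    """Return (left, right) wheel angular velocities in rad/s.

    linear_mps is the forward speed of the base center; angular_radps is the
    turn rate, positive counter-clockwise.
    """
    half_track = geom.wheel_separation_m / 2.0
    # Each wheel's ground speed is the base speed plus or minus the
    # contribution of the rotation about the base center.
    v_left = linear_mps - angular_radps * half_track
    v_right = linear_mps + angular_radps * half_track
    return v_left / geom.wheel_radius_m, v_right / geom.wheel_radius_m


if __name__ == "__main__":
    geom = DiffDriveGeometry(wheel_radius_m=0.05, wheel_separation_m=0.30)
    print("straight:", wheel_speeds(0.2, 0.0, geom))
    print("spin in place:", wheel_speeds(0.0, 1.0, geom))
```

Driving straight gives equal wheel speeds, while a pure rotation gives equal and opposite ones. On a robot like this, the resulting targets would typically be handed to the motor microcontroller, which closes the loop with the encoders.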

How It Works (see the demo below)

You ask Wilson for an ice-cold beverage of your choosing.

Gemini Live interprets the request and decides which tools Wilson should use.

Wilson plans a path to the mini-fridge with the Nav2 tool.

Wilson sends a command to open the motorized mini-fridge door.

RGB and depth cameras detect and localize the requested drink.

Wilson uses the MoveIt tool to plan and execute the grasp.

Wilson navigates back and delivers the beverage to you.
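The steps above amount to the model emitting a sequence of tool calls that the robot executes one at a time. Here is a minimal sketch of that dispatch pattern in plain Python. It does not use the Gemini SDK or ROS 2; every tool name (`navigate_to`, `open_fridge`, `locate_drink`, `grasp`) and the `{"name": ..., "args": ...}` call shape are assumptions for illustration, and each tool body is a stub standing in for the real Nav2, perception, or MoveIt work.

```python
from typing import Callable

# Registry of tools the model may request. All names here are hypothetical.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Register a function under a tool name."""
    def decorator(fn):
        TOOLS[name] = fn
        return fn
    return decorator


@tool("navigate_to")
def navigate_to(location: str) -> str:
    # Stub: a real version would send a navigation goal and wait for the result.
    return f"arrived at {location}"


@tool("open_fridge")
def open_fridge() -> str:
    # Stub: a real version would command the motorized door.
    return "fridge door open"


@tool("locate_drink")
def locate_drink(drink: str) -> str:
    # Stub: a real version would run detection on the RGB and depth images.
    return f"{drink} located"


@tool("grasp")
def grasp(drink: str) -> str:
    # Stub: a real version would plan and execute an arm trajectory.
    return f"{drink} grasped"


def dispatch(call: dict) -> str:
    """Run one tool call of the assumed form {"name": str, "args": dict}."""
    name = call["name"]
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**call.get("args", {}))


def run_plan(calls: list[dict]) -> list[str]:
    """Execute calls in order, stopping at the first error."""
    results = []
    for call in calls:
        result = dispatch(call)
        results.append(result)
        if result.startswith("error"):
            break
    return results


if __name__ == "__main__":
    plan = [
        {"name": "navigate_to", "args": {"location": "mini-fridge"}},
        {"name": "open_fridge"},
        {"name": "locate_drink", "args": {"drink": "Coke"}},
        {"name": "grasp", "args": {"drink": "Coke"}},
        {"name": "navigate_to", "args": {"location": "user"}},
    ]
    for line in run_plan(plan):
        print(line)
```

In a live session the calls would arrive one at a time from the model, with each result sent back before the next decision, rather than as a fixed list. The registry keeps that loop the same no matter how many tools are added.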

Future Work

Develop MoveIt tools for Gemini to plan manipulation.

Incorporate soft 3D force sensors for force-controlled grasping (courtesy of Dr. Jonathan Miller, University of Kansas/AxioForce).

Integrate the ros-mcp-server with Gemini to expand Wilson’s tool-use capabilities within ROS 2.

This simulation demonstrates Wilson’s ability to respond to natural-language input using Google’s Gemini Live multimodal AI model. Gemini receives the command and determines how to use Wilson’s custom tools to complete the task I assigned, in this case navigating to the living room.
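As a rough illustration of what a custom navigation tool could look like, here is a sketch of a JSON-Schema-style tool declaration (the general shape function-calling APIs tend to accept) together with a handler that resolves a spoken place name into a map goal. The location names, coordinates, and the `resolve_goal` helper are all assumptions made for this example. They are not taken from Wilson's code or map.

```python
import math

# Hypothetical named poses in the map frame: (x [m], y [m], yaw [rad]).
NAMED_LOCATIONS = {
    "living room": (2.0, 1.5, 0.0),
    "mini-fridge": (0.5, 3.0, math.pi / 2),
}

# Assumed declaration: tells the model the tool's name, purpose, and arguments.
NAVIGATE_TO_DECLARATION = {
    "name": "navigate_to",
    "description": "Drive the robot to a named location on its map.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "enum": sorted(NAMED_LOCATIONS)},
        },
        "required": ["location"],
    },
}


def resolve_goal(args: dict) -> dict:
    """Turn the model's arguments into a planar goal pose, or an error.

    Yaw is converted to the z and w components of a quaternion, since a
    rotation about the vertical axis leaves x and y at zero.
    """
    location = str(args.get("location", "")).strip().lower()
    if location not in NAMED_LOCATIONS:
        return {"ok": False, "error": f"unknown location: {location!r}"}
    x, y, yaw = NAMED_LOCATIONS[location]
    return {
        "ok": True,
        "x": x,
        "y": y,
        "qz": math.sin(yaw / 2.0),
        "qw": math.cos(yaw / 2.0),
    }


if __name__ == "__main__":
    print(resolve_goal({"location": "Living Room"}))
    print(resolve_goal({"location": "garage"}))
```

Returning a structured error instead of raising lets the model hear that a place is unknown and ask a follow-up question instead of failing silently.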